The still photos and movies accessed by the user through the Photos app constitute the photo library. Your app can give the user an interface for exploring this library, similar to the Photos app, through the UIImagePickerController class.

In addition, the Assets Library framework lets you access the photo library and its contents programmatically. You’ll need to link to AssetsLibrary.framework and import <AssetsLibrary/AssetsLibrary.h>.

The UIImagePickerController class can also be used to give the user an interface similar to the Camera app, letting the user take photos and videos on devices with the necessary hardware.

At a deeper level, AV Foundation (Chapter 28) provides direct control over the camera hardware. You’ll need to link to AVFoundation.framework (and probably CoreMedia.framework as well), and import <AVFoundation/AVFoundation.h>.

To use constants such as kUTTypeImage, referred to in this chapter, your app must link to MobileCoreServices.framework and import <MobileCoreServices/MobileCoreServices.h>.

UIImagePickerController is a view controller (a UINavigationController subclass) whose view provides a navigation interface, similar to the Photos app, in which the user can choose an item from the photo library. Alternatively, it can provide an interface, similar to the Camera app, for taking a video or still photo if the necessary hardware is present.

How you display the UIImagePickerController depends on what kind of device this is:

- On the iPhone

  You will typically display the UIImagePickerController as a presented view controller. This presented view controller will appear in portrait orientation, regardless of your app’s rotation settings.

- On the iPad

  If you’re letting the user choose an item from the photo library, you’ll show it in a popover; attempting to display it as a presented view controller causes a runtime exception. (To see how to structure your universal app code, look at Example 29.1.) But if you’re letting the user take a video or still photo, you’ll probably treat UIImagePickerController as a presented view controller on the iPad, just as on the iPhone.

To let the user choose an item from the photo library, instantiate UIImagePickerController and set its sourceType to one of these values:

- UIImagePickerControllerSourceTypeSavedPhotosAlbum - The user is confined to the contents of the Camera Roll / Saved Photos album.
- UIImagePickerControllerSourceTypePhotoLibrary - The user is shown a table of all albums, and can navigate into any of them.

You should call the class method isSourceTypeAvailable: beforehand; if it doesn’t return YES, don’t present the controller with that source type.

You’ll probably want to specify an array of mediaTypes you’re interested in. This array will usually contain kUTTypeImage, kUTTypeMovie, or both; or you can specify all available types by calling the class method availableMediaTypesForSourceType:.

After doing all of that, and having supplied a delegate (adopting UIImagePickerControllerDelegate and UINavigationControllerDelegate), present the view controller:

```objc
UIImagePickerControllerSourceType type =
    UIImagePickerControllerSourceTypePhotoLibrary;
BOOL ok = [UIImagePickerController isSourceTypeAvailable:type];
if (!ok) {
    NSLog(@"alas");
    return;
}
UIImagePickerController* picker = [UIImagePickerController new];
picker.sourceType = type;
picker.mediaTypes =
    [UIImagePickerController availableMediaTypesForSourceType:type];
picker.delegate = self;
[self presentViewController:picker animated:YES completion:nil]; // iPhone
```

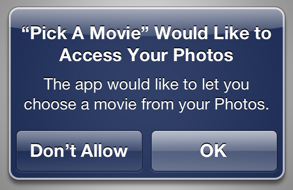

New in iOS 6, the very first time you do this, a system alert will appear, prompting the user to grant your app permission to access the photo library (Figure 30.1). You can modify the body of this alert by setting the “Privacy — Photo Library Usage Description” key (NSPhotoLibraryUsageDescription) in your app’s Info.plist to tell the user why you want to access the photo library. This is a kind of “elevator pitch”; you need to persuade the user in very few words.

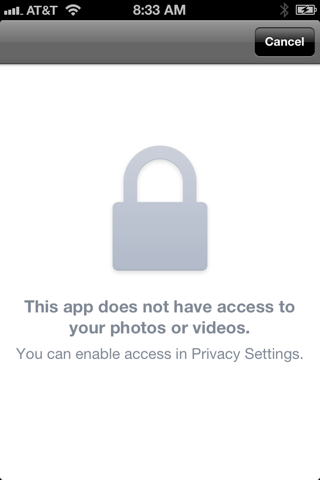

If the user denies your app access, you’ll still be able to present the UIImagePickerController, but it will be empty (with a reminder that the user has denied your app access to the photo library) and the user won’t be able to do anything but cancel (Figure 30.2). Thus, your code is unaffected. You can check beforehand to learn whether your app has access to the photo library — I’ll explain how later in this chapter — and opt to do something other than present the UIImagePickerController if access has been denied; but you don’t have to, because the user will see a coherent interface, and your app will proceed normally afterwards, thinking that the user has cancelled from the picker.

Note

To retest the system alert and other access-related behaviors, go to the Settings app and choose General → Reset → Reset Location & Privacy. This causes the system to forget that it has ever asked about access for any app.

Warning

If the user does what Figure 30.2 suggests, switching to the Settings app and enabling access for your app under Privacy → Photos, your app will crash in the background! This is unfortunate, but is probably not a bug; Apple presumably feels that in this situation your app cannot continue coherently and should start over from scratch.

On the iPhone, the delegate will receive one of these messages:

- imagePickerController:didFinishPickingMediaWithInfo:
- imagePickerControllerDidCancel:

On the iPad, there’s no Cancel button, so there’s no imagePickerControllerDidCancel:; you can detect the dismissal of the popover through the popover delegate. On the iPhone, if a UIImagePickerControllerDelegate method is not implemented, the view controller is dismissed automatically; but rather than relying on this, you should implement both delegate methods and dismiss the view controller yourself in each one.
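On the iPad, presenting the library picker in a popover and learning of its dismissal might look like the following sketch. It assumes that self adopts UIPopoverControllerDelegate and has a strong UIPopoverController property called currentPop (the name used in the popover movie example later in this chapter). It also assumes that sender is the button the user tapped. The action name doChoose: is hypothetical.

```objc
// Hypothetical action; assumes self adopts UIPopoverControllerDelegate
// and has a strong property: UIPopoverController* currentPop.
- (IBAction)doChoose:(UIButton*)sender {
    UIImagePickerControllerSourceType type =
        UIImagePickerControllerSourceTypePhotoLibrary;
    if (![UIImagePickerController isSourceTypeAvailable:type])
        return;
    UIImagePickerController* picker = [UIImagePickerController new];
    picker.sourceType = type;
    picker.delegate = self;
    UIPopoverController* pop =
        [[UIPopoverController alloc] initWithContentViewController:picker];
    pop.delegate = self;
    self.currentPop = pop; // a visible popover must stay retained
    [pop presentPopoverFromRect:sender.bounds
                         inView:sender
       permittedArrowDirections:UIPopoverArrowDirectionAny
                       animated:YES];
}

// The iPad stand-in for imagePickerControllerDidCancel: the user tapped
// outside the popover. Not called when you dismiss the popover in code.
- (void)popoverControllerDidDismissPopover:(UIPopoverController *)pc {
    self.currentPop = nil;
}
```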

The didFinish... method is handed a dictionary of information about the chosen item. The keys in this dictionary depend on the media type.

- An image. The keys are:
  - UIImagePickerControllerMediaType - A UTI; probably @"public.image", which is the same as kUTTypeImage.
  - UIImagePickerControllerOriginalImage - A UIImage.
  - UIImagePickerControllerReferenceURL - An ALAsset URL (discussed later in this chapter).
- A movie. The keys are:
  - UIImagePickerControllerMediaType - A UTI; probably @"public.movie", which is the same as kUTTypeMovie.
  - UIImagePickerControllerMediaURL - A file URL to a copy of the movie saved into a temporary directory. This would be suitable, for example, for displaying the movie with an MPMoviePlayerController (Chapter 28).
  - UIImagePickerControllerReferenceURL - An ALAsset URL (discussed later in this chapter).

Optionally, you can set the view controller’s allowsEditing to YES. In the case of an image, the interface then allows the user to scale the image up and to move it so as to be cropped by a preset rectangle; the dictionary will include two additional keys:

- UIImagePickerControllerCropRect - An NSValue wrapping a CGRect.
- UIImagePickerControllerEditedImage - A UIImage.

In the case of a movie, if the view controller’s allowsEditing is YES, the user can trim the movie just as with a UIVideoEditorController (Chapter 28). The dictionary keys are the same as before, but the file URL points to the trimmed copy in the temporary directory.
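An iPhone delegate method that handles both media types might look like the sketch below. It branches on UIImagePickerControllerMediaType and, for an image, prefers the edited version when allowsEditing produced one. It assumes a UIImageView property called iv and a method of your own called showMovie: that plays a movie from a file URL; both are illustrative.

```objc
#import <MobileCoreServices/MobileCoreServices.h> // for kUTTypeImage

// iPhone sketch; self.iv and showMovie: are assumed to exist.
- (void)imagePickerController:(UIImagePickerController *)picker
didFinishPickingMediaWithInfo:(NSDictionary *)info {
    NSString* type = info[UIImagePickerControllerMediaType];
    if ([type isEqualToString:(__bridge NSString*)kUTTypeImage]) {
        // With allowsEditing == YES, prefer the cropped image.
        UIImage* im = info[UIImagePickerControllerEditedImage];
        if (!im)
            im = info[UIImagePickerControllerOriginalImage];
        self.iv.image = im;
        [self dismissViewControllerAnimated:YES completion:nil];
    } else {
        // A movie: a (possibly trimmed) copy in the temporary directory.
        NSURL* url = info[UIImagePickerControllerMediaURL];
        // Play it only once the picker is gone.
        [self dismissViewControllerAnimated:YES completion:^{
            if (url)
                [self showMovie:url];
        }];
    }
}
```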

Because of restrictions on how many movies can play at once (see “There Can Be Only One” in Chapter 28), if you use a UIImagePickerController to let the user choose a movie and you then want to play that movie in an MPMoviePlayerController, you must destroy the UIImagePickerController first. How you do this depends on how you displayed the UIImagePickerController. If you’re using a presented view controller on the iPhone, you can use the completion handler to ensure that the MPMoviePlayerController isn’t configured until after the animation dismissing the presented view:

```objc
-(void)imagePickerController:(UIImagePickerController *)picker
didFinishPickingMediaWithInfo:(NSDictionary *)info {
    NSURL* url = info[UIImagePickerControllerMediaURL];
    [self dismissViewControllerAnimated:YES completion:^{
        if (url)
            [self showMovie:url];
    }];
}
```

If you’re using a popover on the iPad, you can release the UIPopoverController (probably by nilifying the instance variable that’s retaining it) after dismissing the popover without animation; even then, I find, it is necessary to add a delay before trying to show the movie:

```objc
-(void)imagePickerController:(UIImagePickerController *)picker
didFinishPickingMediaWithInfo:(NSDictionary *)info {
    NSURL* url = info[UIImagePickerControllerMediaURL];
    [self.currentPop dismissPopoverAnimated:NO]; // must be NO!
    self.currentPop = nil;
    if (url) {
        // defer showMovie: until the current Core Animation transaction completes
        [CATransaction setCompletionBlock:^{
            [self showMovie:url];
        }];
    }
}
```

To prompt the user to take a photo or video in an interface similar to the Camera app, first check isSourceTypeAvailable: for UIImagePickerControllerSourceTypeCamera; it will be NO if the user’s device has no camera or the camera is unavailable. If it is YES, call availableMediaTypesForSourceType: to learn whether the user can take a still photo (kUTTypeImage), a video (kUTTypeMovie), or both. Now instantiate UIImagePickerController, set its source type to UIImagePickerControllerSourceTypeCamera, and set its mediaTypes in accordance with which types you just learned are available. Finally, set a delegate (adopting UINavigationControllerDelegate and UIImagePickerControllerDelegate), and present the view controller. In this situation, it is legal (and preferable) to use a presented view controller even on the iPad.

For video, you can also specify the videoQuality and videoMaximumDuration. Moreover, these additional properties and class methods allow you to discover the camera capabilities:

- isCameraDeviceAvailable: - Checks whether the front or rear camera is available, using one of these parameters:
  - UIImagePickerControllerCameraDeviceFront
  - UIImagePickerControllerCameraDeviceRear
- cameraDevice - Lets you learn and set which camera is being used.
- availableCaptureModesForCameraDevice: - Checks whether the given camera can capture still images, video, or both. You specify the front or rear camera; the method returns an NSArray of NSNumbers, each wrapping an integer capture mode. Possible modes are:
  - UIImagePickerControllerCameraCaptureModePhoto
  - UIImagePickerControllerCameraCaptureModeVideo
- cameraCaptureMode - Lets you learn and set the capture mode (still or video).
- isFlashAvailableForCameraDevice: - Checks whether flash is available.
- cameraFlashMode - Lets you learn and set the flash mode (or, for a movie, toggles the LED “torch”). Your choices are:
  - UIImagePickerControllerCameraFlashModeOff
  - UIImagePickerControllerCameraFlashModeAuto
  - UIImagePickerControllerCameraFlashModeOn

Warning

Setting camera-related properties such as cameraDevice when there is no camera or when the UIImagePickerController is not set to camera mode can crash your app.
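Here is a minimal sketch that heeds that warning. It touches camera properties only after the relevant availability checks, and it unpacks the NSNumbers returned by availableCaptureModesForCameraDevice:. It assumes that picker has already been created and given the source type UIImagePickerControllerSourceTypeCamera, as described above. The choice of front camera and automatic flash is illustrative.

```objc
// Assumes picker.sourceType == UIImagePickerControllerSourceTypeCamera already.
UIImagePickerControllerCameraDevice dev =
    UIImagePickerControllerCameraDeviceFront;
if ([UIImagePickerController isCameraDeviceAvailable:dev]) {
    picker.cameraDevice = dev;
    // An NSArray of NSNumbers; containsObject: compares numbers by value.
    NSArray* modes =
        [UIImagePickerController availableCaptureModesForCameraDevice:dev];
    BOOL canShootVideo = [modes containsObject:
        @(UIImagePickerControllerCameraCaptureModeVideo)];
    NSLog(@"front camera shoots video: %@", canShootVideo ? @"yes" : @"no");
    // Only touch the flash mode if this camera has a flash.
    if ([UIImagePickerController isFlashAvailableForCameraDevice:dev])
        picker.cameraFlashMode = UIImagePickerControllerCameraFlashModeAuto;
}
```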

When the view controller appears, the user will see the interface for taking a picture, familiar from the Camera app, possibly including flash button, camera selection button, and digital zoom (if the hardware supports these), still/video switch (if your mediaTypes setting allows both), and Cancel and Shutter buttons. If the user takes a picture, the presented view offers an opportunity to use the picture or to retake it.

Allowing the user to edit the captured image or movie, and handling the outcome with the delegate messages, is the same as I described in the previous section. There won’t be any UIImagePickerControllerReferenceURL key in the dictionary delivered to the delegate, because the image isn’t in the photo library. A still image might report a UIImagePickerControllerMediaMetadata key containing the metadata for the photo. The photo library was not involved in the process of media capture, so no user permission to access the photo library is needed; of course, if you now propose to save the media into the photo library (as described later in this chapter), you will need permission.

Here’s a very simple example in which we offer the user a chance to take a still image; if the user does so, we insert the image into our interface in a UIImageView (iv):

```objc
- (IBAction)doTake:(id)sender {
    BOOL ok = [UIImagePickerController isSourceTypeAvailable:
               UIImagePickerControllerSourceTypeCamera];
    if (!ok) {
        NSLog(@"no camera");
        return;
    }
    NSArray* arr = [UIImagePickerController availableMediaTypesForSourceType:
                    UIImagePickerControllerSourceTypeCamera];
    if ([arr indexOfObject:(__bridge NSString*)kUTTypeImage] == NSNotFound) {
        NSLog(@"no stills");
        return;
    }
    UIImagePickerController* picker = [UIImagePickerController new];
    picker.sourceType = UIImagePickerControllerSourceTypeCamera;
    // kUTTypeImage is a CFStringRef; bridge it to put it in an NSArray
    picker.mediaTypes = @[(__bridge NSString*)kUTTypeImage];
    picker.delegate = self;
    [self presentViewController:picker animated:YES completion:nil];
}

- (void)imagePickerControllerDidCancel:(UIImagePickerController *)picker {
    [self dismissViewControllerAnimated:YES completion:nil];
}

- (void)imagePickerController:(UIImagePickerController *)picker
didFinishPickingMediaWithInfo:(NSDictionary *)info {
    UIImage* im = info[UIImagePickerControllerOriginalImage];
    if (im)
        self.iv.image = im;
    [self dismissViewControllerAnimated:YES completion:nil];
}
```

In the image capture interface, you can hide the standard controls by setting showsCameraControls to NO and replace them with your own overlay view, which you supply as the value of the cameraOverlayView property. In this case, you’re probably going to want some means in your overlay view to allow the user to take a picture! You can do that through these methods:

- takePicture
- startVideoCapture
- stopVideoCapture

You can supply a cameraOverlayView even if you don’t set showsCameraControls to NO; but in that case you’ll need to negotiate the position of your added controls if you don’t want them to cover the existing controls.
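For video, a single overlay button can toggle recording, as in this sketch. It assumes the picker's mediaTypes include kUTTypeMovie, that its cameraCaptureMode is UIImagePickerControllerCameraCaptureModeVideo, and that self.picker retains it. The action name toggleRecording: and the BOOL property recording are hypothetical.

```objc
// Hypothetical action for a button inside the cameraOverlayView;
// self.recording is a hypothetical BOOL property.
- (void)toggleRecording:(UIButton*)sender {
    if (self.recording) {
        // The finished movie arrives via didFinishPickingMediaWithInfo:.
        [self.picker stopVideoCapture];
        self.recording = NO;
    } else {
        // startVideoCapture returns NO if capture could not begin.
        self.recording = [self.picker startVideoCapture];
    }
    [sender setTitle:(self.recording ? @"Stop" : @"Record")
            forState:UIControlStateNormal];
}
```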

The key to customizing the look and behavior of the image capture interface is that a UIImagePickerController is a UINavigationController; the controls shown at the bottom of the default interface are the navigation controller’s toolbar. In this example, I’ll remove all the default controls and allow the user to double-tap the image in order to take a picture:

```objc
// ... starts out as before ...
picker.delegate = self;
picker.showsCameraControls = NO;
CGRect f = self.view.window.bounds;
UIView* v = [[UIView alloc] initWithFrame:f];
UITapGestureRecognizer* t =
    [[UITapGestureRecognizer alloc] initWithTarget:self
                                            action:@selector(tap:)];
t.numberOfTapsRequired = 2;
[v addGestureRecognizer:t];
picker.cameraOverlayView = v;
[self presentViewController:picker animated:YES completion:nil];
self.picker = picker;
// ...

- (void) tap: (id) g {
    [self.picker takePicture];
}
```

The interface is marred by a blank area the size of the toolbar at the bottom of the screen, below the preview image. What are we to do about this? You can zoom or otherwise transform the preview image by setting the cameraViewTransform property; but this can be tricky, because different versions of iOS apply your transform differently, and in any case it’s hard to know what values to use. An easier solution is to put your own view where the blank area will appear; that way, the area looks like a deliberate part of the interface rather than an empty gap:

```objc
CGFloat h = 53; // height of the blank area at the bottom
UIView* v = [[UIView alloc] initWithFrame:f]; // f: the window bounds, as before
UIView* v2 =
    [[UIView alloc] initWithFrame:
        CGRectMake(0,f.size.height-h,f.size.width,h)];
v2.backgroundColor = [UIColor redColor];
[v addSubview: v2];
UILabel* lab = [UILabel new];
lab.text = @"Double tap to take a picture";
lab.backgroundColor = [UIColor clearColor];
[lab sizeToFit];
lab.center = CGPointMake(CGRectGetMidX(v2.bounds), CGRectGetMidY(v2.bounds));
[v2 addSubview:lab];
```

Another approach is to take advantage of the fact that, because we are the UIImagePickerController’s delegate, we are not only its UIImagePickerControllerDelegate but also its UINavigationControllerDelegate. We can therefore get some control over the navigation controller’s interface, and populate its root view controller’s toolbar — but only if we wait until the root view controller’s view actually appears. Here, I’ll increase the height of the toolbar to ensure that it covers the blank area, and put a Cancel button into it:

```objc
- (void)navigationController:(UINavigationController *)nc
       didShowViewController:(UIViewController *)vc
                    animated:(BOOL)animated {
    [nc setToolbarHidden:NO];
    CGRect f = nc.toolbar.frame;
    CGFloat h = 56; // determined experimentally
    CGFloat diff = h - f.size.height;
    f.size.height = h;
    f.origin.y -= diff;
    nc.toolbar.frame = f;
    UIBarButtonItem* b =
        [[UIBarButtonItem alloc] initWithTitle:@"Cancel"
                                         style:UIBarButtonItemStyleBordered
                                        target:self
                                        action:@selector(doCancel:)];
    UILabel* lab = [UILabel new];
    lab.text = @"Double tap to take a picture";
    lab.backgroundColor = [UIColor clearColor];
    [lab sizeToFit];
    UIBarButtonItem* b2 = [[UIBarButtonItem alloc] initWithCustomView:lab];
    [nc.topViewController setToolbarItems:@[b, b2]];
}
```

When the user double-taps to take a picture, our didFinishPickingMediaWithInfo delegate method is called, just as before. We don’t automatically get the secondary interface where the user is shown the resulting image and offered an opportunity to use it or retake the image. But we can provide such an interface ourselves, by pushing another view controller onto the navigation controller:

```objc
- (void)imagePickerController:(UIImagePickerController *)picker
didFinishPickingMediaWithInfo:(NSDictionary *)info {
    UIImage* im = info[UIImagePickerControllerOriginalImage];
    if (!im)
        return;
    SecondViewController* svc =
        [[SecondViewController alloc] initWithNibName:nil bundle:nil image:im];
    [picker pushViewController:svc animated:YES];
}
```

(Designing the SecondViewController class is left as an exercise for the reader.)

Instead of using UIImagePickerController, you can control the camera and capture images using the AV Foundation framework (Chapter 28). You get no help with interface (except for displaying in your interface what the camera “sees”), but you get far more detailed control than UIImagePickerController can give you; for example, for stills, you can control focus and exposure directly and independently, and for video, you can determine the quality, size, and frame rate of the resulting movie. You can also capture audio, of course.

The heart of all AV Foundation capture operations is an AVCaptureSession object. You configure this and provide it as desired with inputs (such as a camera) and outputs (such as a file); then you call startRunning to begin the actual capture. You can reconfigure an AVCaptureSession, possibly adding or removing an input or output, while it is running — indeed, doing so is far more efficient than stopping the session and starting it again — but you should wrap your configuration changes in beginConfiguration and commitConfiguration.
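As an example of reconfiguring a running session, the following sketch swaps the camera input for the front camera. It brackets the change with beginConfiguration and commitConfiguration. It assumes that self.sess is the running AVCaptureSession and that all of its current inputs may be replaced. The method name switchToFrontCamera is hypothetical.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical method; assumes every current input of self.sess may be replaced.
- (void)switchToFrontCamera {
    AVCaptureDevice* front = nil;
    for (AVCaptureDevice* d in
         [AVCaptureDevice devicesWithMediaType:AVMediaTypeVideo]) {
        if (d.position == AVCaptureDevicePositionFront)
            front = d;
    }
    if (!front)
        return; // this device has no front camera
    NSError* err = nil;
    AVCaptureDeviceInput* newInput =
        [AVCaptureDeviceInput deviceInputWithDevice:front error:&err];
    if (!newInput) {
        NSLog(@"%@", err);
        return;
    }
    // Batch the changes so the running session applies them together.
    [self.sess beginConfiguration];
    NSArray* oldInputs = [self.sess.inputs copy];
    for (AVCaptureInput* input in oldInputs)
        [self.sess removeInput:input];
    if ([self.sess canAddInput:newInput]) {
        [self.sess addInput:newInput];
    } else {
        for (AVCaptureInput* input in oldInputs) // put things back
            [self.sess addInput:input];
    }
    [self.sess commitConfiguration];
}
```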

As a rock-bottom example, let’s start by displaying in our interface, in real time, what the camera sees. This requires an AVCaptureVideoPreviewLayer, a CALayer subclass. This layer is not an AVCaptureSession output; rather, the layer receives its imagery by owning the AVCaptureSession:

```objc
self.sess = [AVCaptureSession new];
AVCaptureDevice* cam =
    [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];
if (!cam)
    return; // no camera on this device
NSError* err = nil;
AVCaptureDeviceInput* input =
    [AVCaptureDeviceInput deviceInputWithDevice:cam error:&err];
if (!input) {
    NSLog(@"%@", err);
    return;
}
[self.sess addInput:input];
AVCaptureVideoPreviewLayer* lay =
    [[AVCaptureVideoPreviewLayer alloc] initWithSession:self.sess];
lay.frame = CGRectMake(10,30,300,300);
[self.view.layer addSublayer:lay];
self.previewLayer = lay; // keep a reference so we can remove it later
[self.sess startRunning];
```

Presto! Our interface now contains a window on the world, so to speak. Next, let’s permit the user to snap a still photo, which our interface will display instead of the real-time view of what the camera sees. As a first step, we’ll need to revise what happens as we create our AVCaptureSession in the previous code. Since this image is to go directly into our interface, we won’t need the full eight-megapixel size of which the iPhone 4S and iPhone 5 cameras are capable, so we’ll configure our AVCaptureSession’s sessionPreset to ask for a much smaller image. We’ll also provide an output for our AVCaptureSession, an AVCaptureStillImageOutput, setting its outputSettings to specify the quality of the JPEG image we’re after:

```objc
self.sess = [AVCaptureSession new];
self.sess.sessionPreset = AVCaptureSessionPreset640x480;
self.snapper = [AVCaptureStillImageOutput new];
self.snapper.outputSettings =
    @{AVVideoCodecKey: AVVideoCodecJPEG, AVVideoQualityKey:@0.6};
[self.sess addOutput:self.snapper];
// ... and the rest is as before ...
```

When the user asks to snap a picture, we send captureStillImageAsynchronouslyFromConnection:completionHandler: to our AVCaptureStillImageOutput object. This call requires some preparation. The first argument is an AVCaptureConnection; to find it, we ask the output for its connection that is currently inputting video. The second argument is the block that will be called, possibly on a background thread, when the image data is ready; in the block, we capture the data into a UIImage and, moving onto the main thread (Chapter 38), we construct in the interface a UIImageView containing that image, in place of the AVCaptureVideoPreviewLayer we were displaying previously:

```objc
AVCaptureConnection *vc =
    [self.snapper connectionWithMediaType:AVMediaTypeVideo];
typedef void(^MyBufBlock)(CMSampleBufferRef, NSError*);
MyBufBlock h = ^(CMSampleBufferRef buf, NSError *err) {
    if (!buf) { // the capture failed
        NSLog(@"%@", err);
        return;
    }
    NSData* data =
        [AVCaptureStillImageOutput jpegStillImageNSDataRepresentation:buf];
    UIImage* im = [UIImage imageWithData:data];
    dispatch_async(dispatch_get_main_queue(), ^{
        // replace the live preview with the captured still
        UIImageView* iv =
            [[UIImageView alloc] initWithFrame:CGRectMake(10,30,300,300)];
        iv.contentMode = UIViewContentModeScaleAspectFit;
        iv.image = im;
        [self.view addSubview: iv];
        [self.previewLayer removeFromSuperlayer];
        self.previewLayer = nil;
        [self.sess stopRunning];
    });
};
[self.snapper captureStillImageAsynchronouslyFromConnection:vc
                                          completionHandler:h];
```

Our code has not illustrated setting the focus, changing the flash settings, and so forth; doing so is not difficult (see the class documentation on AVCaptureDevice), but note that you should wrap such changes in calls to lockForConfiguration: and unlockForConfiguration. You can turn on the LED “torch” by setting the back camera’s torchMode to AVCaptureTorchModeOn, even if no AVCaptureSession is running.
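For instance, turning on the torch with the required locking might be sketched like this. The code uses the default video device, which is normally the back camera, and checks that the device has a torch before locking it.

```objc
AVCaptureDevice* cam =
    [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];
if ([cam hasTorch] && [cam isTorchModeSupported:AVCaptureTorchModeOn]) {
    NSError* err = nil;
    // Device settings may be changed only while the device is locked.
    if ([cam lockForConfiguration:&err]) {
        cam.torchMode = AVCaptureTorchModeOn;
        [cam unlockForConfiguration];
    } else {
        NSLog(@"couldn't lock the camera: %@", err);
    }
}
```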

New in iOS 6, you can stop the flow of video data by setting the AVCaptureConnection’s enabled to NO, and there are some other new AVCaptureConnection features, mostly involving stabilization of the video image (not relevant to the example, because a preview layer’s video isn’t stabilized). Plus, AVCaptureVideoPreviewLayer now provides methods for converting between layer coordinates and capture device coordinates; previously, this was a very difficult problem to solve.
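The new coordinate conversion makes tap-to-focus fairly direct. In this sketch, captureDevicePointOfInterestForPoint: translates a tap into the device's coordinate space, and the focus change is wrapped in lockForConfiguration: and unlockForConfiguration as described above. It assumes a tap gesture recognizer on self.view that targets the hypothetical method tapToFocus:. It also assumes that self.previewLayer is the preview layer from the earlier example, showing the default video device.

```objc
// Hypothetical gesture handler.
- (void)tapToFocus:(UITapGestureRecognizer*)g {
    if (!self.previewLayer)
        return; // the preview has already been replaced by a still image
    // view coordinates -> preview layer coordinates -> device coordinates
    CGPoint p = [g locationInView:self.view];
    p = [self.previewLayer convertPoint:p fromLayer:self.view.layer];
    CGPoint devicePoint =
        [self.previewLayer captureDevicePointOfInterestForPoint:p];
    AVCaptureDevice* cam =
        [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];
    if (![cam isFocusPointOfInterestSupported] ||
        ![cam isFocusModeSupported:AVCaptureFocusModeAutoFocus])
        return;
    NSError* err = nil;
    if ([cam lockForConfiguration:&err]) {
        cam.focusPointOfInterest = devicePoint;
        // Setting the mode after the point starts focusing at that point.
        cam.focusMode = AVCaptureFocusModeAutoFocus;
        [cam unlockForConfiguration];
    }
}
```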

AV Foundation’s control over the camera, and its ability to process incoming data (especially video data), go far deeper than there is room to discuss here, so consult the documentation; in particular, see the “Media Capture” chapter of the AV Foundation Programming Guide. The AV Foundation Release Notes for iOS 5 also contain some useful (and still relevant) hints. There are also excellent WWDC videos on AV Foundation, and some fine sample code; I found Apple’s AVCam example very helpful while preparing this discussion.

The Assets Library framework does for the photo library roughly what the Media Player framework does for the music library (Chapter 29), letting your code explore the library’s contents. One obvious use of the Assets Library framework might be to implement your own interface for letting the user choose an image, in a way that transcends the limitations of UIImagePickerController. But you can go further with the photo library than you can with the media library: you can save media into the Camera Roll / Saved Photos album, and you can even create a new album and save media into it.

A photo or video in the photo library is an ALAsset. Like a media entity (Chapter 29), an ALAsset can describe itself through key–value pairs called properties. (This use of the word “properties” has nothing to do with the Objective-C properties discussed in Chapter 12.) For example, it can report its type (photo or video), its creation date, its orientation if it is a photo whose metadata contains this information, and its duration if it is a video. You fetch a property value with valueForProperty:. The properties have names like ALAssetPropertyType.

A photo can provide multiple representations (roughly, image file formats). A given photo ALAsset lists these representations as one of its properties, ALAssetPropertyRepresentations, an array of strings giving the UTIs identifying the file formats; a typical UTI might be @"public.jpeg" (kUTTypeJPEG, if you’ve linked to MobileCoreServices.framework). A representation is an ALAssetRepresentation. You can get a photo’s defaultRepresentation, or ask for a particular representation by submitting a file format’s UTI to representationForUTI:.

Once you have an ALAssetRepresentation, you can interrogate it to get the actual image, either as raw data or as a CGImage (see Chapter 15). The simplest way is to ask for its fullResolutionImage or its fullScreenImage (the latter is more suitable for display in your interface, and is identical to what the Photos app displays); you may then want to derive a UIImage from this using imageWithCGImage:scale:orientation:. The original scale and orientation of the image are available as the ALAssetRepresentation’s scale and orientation. Alternatively, if all you need is a small version of the image to display in your interface, you can ask the ALAsset itself for its aspectRatioThumbnail. An ALAssetRepresentation also has a url, which is the unique identifier for the ALAsset.

The photo library itself is an ALAssetsLibrary instance. It is divided into groups (ALAssetsGroup), which have types. For example, the user might have multiple albums; each of these is a group of type ALAssetsGroupAlbum. You also have access to the PhotoStream album. An ALAssetsGroup has properties, such as a name, which you can fetch with valueForProperty:; one such property, the group’s URL (ALAssetsGroupPropertyURL), is its unique identifier. To fetch assets from the library, you can either fetch one specific asset by providing its URL, or start with a group and enumerate the group’s assets. To obtain a group, you can either enumerate the library’s groups of a certain type, in which case you are handed each group as an ALAssetsGroup, or provide a particular group’s URL. Before enumerating a group’s assets, you may optionally filter the group using a simple ALAssetsFilter; this limits any subsequent enumeration to photos only, videos only, or both.
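Fetching a single asset by its URL (for example, a UIImagePickerControllerReferenceURL saved from an earlier pick) could be sketched with assetForURL:resultBlock:failureBlock:, as follows. The method name showAssetWithURL: is hypothetical. So are the two properties it relies on: library, a strong ALAssetsLibrary property kept alive while its assets are in use, and iv, a UIImageView.

```objc
#import <AssetsLibrary/AssetsLibrary.h>

// Hypothetical method; self.library and self.iv are assumed properties.
- (void)showAssetWithURL:(NSURL*)url {
    [self.library assetForURL:url
                  resultBlock:^(ALAsset *asset) {
                      if (!asset)
                          return; // e.g. the asset is no longer in the library
                      self.iv.image =
                          [UIImage imageWithCGImage:[asset aspectRatioThumbnail]];
                  }
                 failureBlock:^(NSError *error) {
                      NSLog(@"oops! %@", [error localizedDescription]);
                  }];
}
```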

The Assets Library framework uses Objective-C blocks for fetching and enumerating assets and groups. These blocks behave in a special way: at the end of the enumeration, they are called one extra time with a nil first parameter. Thus, you must code your block carefully to avoid treating the first parameter as real on that final call. Formerly, I was mystified by this curious block enumeration behavior, but one day the reason for it came to me in a flash: these blocks are all called asynchronously (on the main thread), meaning that the rest of your code has already finished running, so you’re given an extra pass through the block as your first opportunity to do something with the data you’ve gathered in the previous passes.
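Here is a sketch of putting that final nil call to work. It collects a thumbnail for every photo in a group, using an ALAssetsFilter to skip videos, and hands the finished array over only when the block receives nil. It assumes that group is an ALAssetsGroup obtained from a groups enumeration and that thumbs is a hypothetical NSArray property that feeds the interface.

```objc
// Assumes `group` came from a groups enumeration; self.thumbs is hypothetical.
NSMutableArray* thumbs = [NSMutableArray array];
[group setAssetsFilter:[ALAssetsFilter allPhotos]]; // photos only
[group enumerateAssetsUsingBlock:
    ^(ALAsset *result, NSUInteger index, BOOL *stop) {
        if (result) {
            [thumbs addObject:[UIImage imageWithCGImage:[result thumbnail]]];
        } else {
            // The extra call with nil: every asset has been seen.
            self.thumbs = thumbs;
        }
    }];
```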

As I mentioned earlier in this chapter, the system will ask the user for permission the first time your app tries to access the photo library, and the user can refuse. You can check beforehand whether your app has access:

```objc
ALAuthorizationStatus stat = [ALAssetsLibrary authorizationStatus];
if (stat == ALAuthorizationStatusDenied ||
    stat == ALAuthorizationStatusRestricted) {
    // in real life, we could put up interface asking for access
    NSLog(@"%@", @"No access");
    return;
}
```

There is, however, no need to do this, because all the block-based methods for accessing the library allow you to supply a failure block; thus, your code will be able to retreat in good order when it discovers that it can’t access the library.

We now know enough for an example! I’ll fetch the first photo from the album named “mattBestVertical” in my photo library and stick it into a UIImageView in the interface. For readability, I’ve set up the blocks in my code separately as variables before they are used, so it will help to read backward: we enumerate (at the end of the code) using the getGroups block (previously defined), which itself enumerates using the getPix block (defined before that). We must also be prepared with a block that handles the possibility of an error. Here we go:

```objc
// what I'll do with the assets from the group
ALAssetsGroupEnumerationResultsBlock getPix =
    ^ (ALAsset *result, NSUInteger index, BOOL *stop) {
        if (!result)
            return;
        ALAssetRepresentation* rep = [result defaultRepresentation];
        CGImageRef im = [rep fullScreenImage];
        UIImage* im2 =
            [UIImage imageWithCGImage:im scale:0
                          orientation:(UIImageOrientation)rep.orientation];
        self.iv.image = im2; // put image into our UIImageView
        *stop = YES; // got first image, all done
    };
// what I'll do with the groups from the library
ALAssetsLibraryGroupsEnumerationResultsBlock getGroups =
    ^ (ALAssetsGroup *group, BOOL *stop) {
        if (!group)
            return;
        NSString* title = [group valueForProperty: ALAssetsGroupPropertyName];
        if ([title isEqualToString: @"mattBestVertical"]) {
            [group enumerateAssetsUsingBlock:getPix];
            *stop = YES; // got target group, all done
        }
    };
// might not be able to access library at all
ALAssetsLibraryAccessFailureBlock oops = ^ (NSError *error) {
    NSLog(@"oops! %@", [error localizedDescription]);
    // e.g., "Global denied access"
};
// and here we go with the actual enumeration!
ALAssetsLibrary* library = [ALAssetsLibrary new];
[library enumerateGroupsWithTypes: ALAssetsGroupAlbum
                       usingBlock: getGroups
                     failureBlock: oops];
```

You can write files into the Camera Roll / Saved Photos album. The basic function for writing an image file to this location is UIImageWriteToSavedPhotosAlbum. Some kinds of video file can also be saved here; in an example in Chapter 28, I checked whether this was true of a certain video file by calling UIVideoAtPathIsCompatibleWithSavedPhotosAlbum, and I saved the file by calling UISaveVideoAtPathToSavedPhotosAlbum.
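UIImageWriteToSavedPhotosAlbum can optionally report back through a callback method, whose selector must have the form shown here. The following minimal sketch assumes the code lives in a view controller. The name saveImage: is hypothetical.

```objc
// Hypothetical method: save a UIImage and ask to be told when it's done.
- (void)saveImage:(UIImage*)im {
    UIImageWriteToSavedPhotosAlbum(im, self,
        @selector(image:didFinishSavingWithError:contextInfo:), NULL);
}

// The callback's signature is dictated by UIImageWriteToSavedPhotosAlbum.
- (void)image:(UIImage *)image
didFinishSavingWithError:(NSError *)error
  contextInfo:(void *)contextInfo {
    if (error)
        NSLog(@"save failed: %@", [error localizedDescription]);
}
```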

The ALAssetsLibrary class extends these abilities by providing five additional methods:

- writeImageToSavedPhotosAlbum:orientation:completionBlock: - Takes a CGImageRef and orientation.
- writeImageToSavedPhotosAlbum:metadata:completionBlock: - Takes a CGImageRef and optional metadata dictionary (such as might arrive through the UIImagePickerControllerMediaMetadata key when the user takes a picture using UIImagePickerController).
- writeImageDataToSavedPhotosAlbum:metadata:completionBlock: - Takes raw image data (NSData) and optional metadata.
- videoAtPathIsCompatibleWithSavedPhotosAlbum: - Takes a file URL (an NSURL, despite the “Path” in the name). Returns a boolean.
- writeVideoAtPathToSavedPhotosAlbum:completionBlock: - Takes a file URL (NSURL).

Saving takes time, so a completion block allows you to be notified when it’s over. The completion block supplies two parameters: an NSURL and an NSError. If the first parameter is not nil, the write succeeded, and this is the URL of the resulting ALAsset. If the first parameter is nil, the write failed, and the second parameter describes the error.
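A completion block that follows this convention might look like the sketch below, which saves a UIImage while keeping its orientation. It assumes a hypothetical strong ALAssetsLibrary property called library. It also assumes that UIImageOrientation and ALAssetOrientation have matching values; the chapter's earlier example relies on the same correspondence when it casts in the other direction. The method name is made up.

```objc
#import <AssetsLibrary/AssetsLibrary.h>

// Hypothetical method; self.library is an assumed strong property.
- (void)writeImageKeepingOrientation:(UIImage*)im {
    [self.library writeImageToSavedPhotosAlbum:im.CGImage
                                   orientation:(ALAssetOrientation)im.imageOrientation
                               completionBlock:^(NSURL *assetURL, NSError *error) {
        // A non-nil URL means success; otherwise the error says why.
        if (assetURL)
            NSLog(@"saved: %@", assetURL);
        else
            NSLog(@"save failed: %@", [error localizedDescription]);
    }];
}
```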

You can create in the Camera Roll / Saved Photos album an image or video that is considered to be a modified version of an existing image or video, by calling an instance method on the original asset:

- writeModifiedImageDataToSavedPhotosAlbum:metadata:completionBlock:
- writeModifiedVideoAtPathToSavedPhotosAlbum:completionBlock:

Afterwards, you can get from the modified asset to the original asset through the former’s originalAsset property.

You are also allowed to “edit” an asset — that is, you can replace an image or video in the library with a different image or video — but only if your application created the asset. Check the asset’s editable property; if it is YES, you can call either of these methods:

- setImageData:metadata:completionBlock:
- setVideoAtPath:completionBlock:

Finally, you are allowed to create an album:

- addAssetsGroupAlbumWithName:resultBlock:failureBlock:

If an album is editable, which would be because you created it, you can add an existing asset to it by calling addAsset:. This is not the same thing as saving a new asset to an album other than the Camera Roll / Saved Photos album; you can’t do that, but once an asset exists, it can belong to more than one album.
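Putting these pieces together, creating an album and adding an existing asset to it might be sketched as follows. The album is created with addAssetsGroupAlbumWithName:resultBlock:failureBlock:, and the asset is fetched by URL before being passed to addAsset:. The method name is hypothetical, and self.library is assumed to be a strong ALAssetsLibrary property.

```objc
#import <AssetsLibrary/AssetsLibrary.h>

// Hypothetical method; self.library is an assumed strong property.
- (void)addAssetWithURL:(NSURL*)assetURL toNewAlbumNamed:(NSString*)name {
    ALAssetsLibraryAccessFailureBlock oops = ^(NSError *error) {
        NSLog(@"oops! %@", [error localizedDescription]);
    };
    [self.library addAssetsGroupAlbumWithName:name
                                  resultBlock:^(ALAssetsGroup *group) {
        // group can be nil, e.g. if an album with this name already exists
        if (!group || !group.editable)
            return;
        [self.library assetForURL:assetURL
                      resultBlock:^(ALAsset *asset) {
            // addAsset: returns NO if the asset could not be added
            if (asset && ![group addAsset:asset])
                NSLog(@"couldn't add the asset");
        }
                     failureBlock:oops];
    }
                                 failureBlock:oops];
}
```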